Dig Into CPU and GPU

Photo by Nana Dua

Let's first recap what a CPU and a GPU are.

Image courtesy: ResearchGate

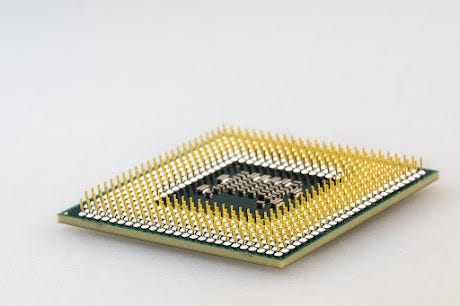

Central Processing Unit (CPU)

The Central Processing Unit (CPU) is the brain of a computer, responsible for carrying out most of the computational tasks. It’s like the conductor of an orchestra, coordinating and executing instructions from various programs and applications. CPUs are designed to handle general-purpose tasks, such as running web browsers, editing documents, and playing games. They are optimized for fast, low-latency execution of sequential code, and they also work in parallel across multiple cores and threads.

Graphics Processing Unit (GPU)

The Graphics Processing Unit (GPU) is a specialized processor designed to handle the computationally intensive tasks involved in graphics rendering and image processing. Unlike CPUs, which have a small number of powerful cores, GPUs are designed for massively parallel processing and can run thousands of lightweight threads simultaneously. This makes them ideal for tasks that can be broken down into smaller, independent units, such as processing pixels in an image or generating 3D graphics.
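To make "smaller, independent units" concrete, here is a minimal Python sketch. The `brighten` function and the tiny grayscale `image` are hypothetical, chosen only for illustration. Because each output pixel depends only on its own input pixel, the image can be split into chunks and processed in any order with the same result. Python threads will not give GPU-style speedups; the point is only the decomposition.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel, amount=40):
    """Brighten one grayscale pixel, clamping the result to 255."""
    return min(255, pixel + amount)


def brighten_sequential(pixels):
    """Process the pixels one after another."""
    return [brighten(p) for p in pixels]


def brighten_chunk(chunk):
    """Process one chunk; it needs no data from any other chunk."""
    return [brighten(p) for p in chunk]


def brighten_parallel(pixels, workers=4):
    """Split the image into chunks and hand each chunk to a worker."""
    size = max(1, (len(pixels) + workers - 1) // workers)
    chunks = [pixels[i:i + size] for i in range(0, len(pixels), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(brighten_chunk, chunks)  # map keeps chunk order
    return [p for chunk in results for p in chunk]


image = [0, 100, 200, 250, 30, 220, 215, 5]
print(brighten_sequential(image))
print(brighten_parallel(image) == brighten_sequential(image))
```

On a GPU the same idea is taken much further: instead of four chunks, each pixel can be given its own thread.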

Evaluation of CPUs and GPUs

CPUs and GPUs are evaluated based on different metrics:

- CPU: Clock speed (GHz), number of cores, threads per core, instructions per cycle (IPC)

- GPU: Number of cores (for example, CUDA cores on Nvidia GPUs), memory bandwidth, transistor count, compute shader performance
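As a rough illustration of how the CPU metrics combine, the sketch below multiplies core count, clock speed, and IPC to get a theoretical peak instruction rate. The example values (8 cores, 3.0 GHz, IPC of 4) are assumptions picked for the arithmetic, not figures for any real processor, and real workloads rarely reach this peak.

```python
def peak_instructions_per_second(cores, clock_ghz, ipc):
    """Theoretical upper bound: every core retires `ipc` instructions each cycle."""
    return cores * clock_ghz * 1e9 * ipc


# Hypothetical CPU used only for illustration.
example = peak_instructions_per_second(cores=8, clock_ghz=3.0, ipc=4)
print(f"{example:.2e} instructions per second")
```

Doubling any one of the three factors doubles the bound, which is why the three metrics are usually quoted together.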

Current State of CPUs and GPUs

CPUs have continued to improve in terms of clock speed and core count, enabling them to handle more demanding tasks. However, their performance gains have slowed down in recent years.

GPUs have experienced significant advancements in terms of processing power and memory bandwidth, making them increasingly powerful and versatile. Their parallel processing capabilities have made them essential for a wide range of applications beyond graphics, including scientific computing, artificial intelligence, and machine learning.

Future of CPUs and GPUs

CPUs are expected to continue focusing on improving efficiency and performance per watt, emphasizing specialized instructions and AI accelerators. GPUs are likely to see further advancements in parallel processing capabilities, memory bandwidth, and energy efficiency.

Role in AI and Futuristic Technologies

CPUs and GPUs play crucial roles in AI and futuristic technologies:

- AI: CPUs handle tasks like decision-making, planning, and reasoning, while GPUs handle the intensive computations involved in training and running AI models.

- Futuristic Technologies: CPUs and GPUs are essential for developing and running advanced technologies like self-driving cars, robotic systems, and virtual reality experiences.

CPUs and GPUs work together in Nvidia systems to provide a powerful and versatile computing platform. Here’s how they collaborate:

NVIDIA CPU and GPU Architecture

Nvidia systems typically employ a hybrid architecture that combines a high-performance CPU with a powerful GPU. The CPU handles general-purpose tasks like running applications, managing system resources, and coordinating data transfers. The GPU, on the other hand, specializes in parallel processing and excels at tasks like graphics rendering, video encoding, and scientific computing.

Data Transfer and Synchronization

CPUs and GPUs communicate with each other through a high-speed interconnect, such as PCI Express, to exchange data and synchronize their operations. The CPU prepares data for the GPU, sends it over the interconnect, and waits for the GPU to complete its processing. The GPU then sends the processed data back to the CPU or directly to the display or other output device.

NVIDIA CUDA Technology

Nvidia’s CUDA (Compute Unified Device Architecture) platform enables developers to write programs that can utilize both the CPU and GPU, leveraging their respective strengths. CUDA programs can offload computationally intensive tasks to the GPU while the CPU handles other tasks, significantly improving performance.
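The offload pattern described above can be sketched in plain Python. This is a simulation, not CUDA: a single worker thread stands in for the GPU, and `heavy_kernel` and `light_cpu_work` are hypothetical placeholders. In a real CUDA program the corresponding steps would be a host-to-device copy, a kernel launch, and a synchronization call such as `cudaDeviceSynchronize`.

```python
from concurrent.futures import ThreadPoolExecutor


def heavy_kernel(data):
    """Stand-in for a GPU kernel: square every element."""
    return [x * x for x in data]


def light_cpu_work(n):
    """Stand-in for work the CPU keeps doing while the device is busy."""
    return sum(range(n))


def run_offloaded(data):
    # A single worker thread plays the role of the GPU "device".
    with ThreadPoolExecutor(max_workers=1) as device:
        future = device.submit(heavy_kernel, data)  # 1. launch, returns at once
        cpu_result = light_cpu_work(10)             # 2. CPU continues meanwhile
        gpu_result = future.result()                # 3. wait for the device
    return cpu_result, gpu_result


cpu_result, gpu_result = run_offloaded([1, 2, 3, 4])
print(cpu_result, gpu_result)
```

The key design point is that the launch in step 1 does not block, so the CPU is free to do useful work until it actually needs the result in step 3.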

Examples of CPU-GPU Collaboration in Nvidia Systems

- Gaming: CPUs handle game logic, AI calculations, and physics simulations, while GPUs render 3D graphics and deliver high frame rates.

- Video Editing: CPUs manage video editing software and handle tasks like timeline manipulation and effects preview, while GPUs accelerate video decoding, encoding, and rendering.

- Scientific Computing: CPUs coordinate scientific simulations and data analysis, while GPUs perform parallel calculations and simulations, enabling faster and more complex models.

- Artificial Intelligence: CPUs handle high-level AI tasks like decision-making and planning, while GPUs accelerate the training and execution of AI models.

Nvidia’s hybrid architecture, coupled with CUDA technology, enables seamless collaboration between CPUs and GPUs, resulting in powerful and versatile computing platforms that excel in a wide range of applications.

Following are some key compute features of the Tesla V100, taken from the NVIDIA whitepaper “The World’s Most Advanced Data Center GPU” (WP-08608–001_v1.1):

New Streaming Multiprocessor (SM) Architecture Optimized for Deep Learning

Volta features a major new redesign of the SM processor architecture that is at the centre of the GPU. New Tensor Cores designed specifically for deep learning deliver up to 12x higher peak TFLOPS for training and 6x higher peak TFLOPS for inference. With independent parallel integer and floating-point data paths, the Volta SM is also much more efficient on workloads with a mix of computation and addressing calculations.

Second-Generation NVIDIA NVLink™

The second generation of NVIDIA’s NVLink high-speed interconnect delivers higher bandwidth, more links, and improved scalability for multi-GPU and multi-GPU/CPU system configurations. Volta GV100 supports up to six NVLink links and a total bandwidth of 300 GB/sec, compared to four NVLink links and 160 GB/s total bandwidth on GP100. NVLink now supports CPU mastering and cache coherence capabilities with IBM Power 9 CPU-based servers. The new NVIDIA DGX-1 with V100 AI supercomputer uses NVLink to deliver greater scalability for ultrafast deep learning training.

HBM2 Memory: Faster, Higher Efficiency

Volta’s highly tuned 16 GB HBM2 memory subsystem delivers 900 GB/sec peak memory bandwidth.
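A quick calculation helps put the NVLink and HBM2 figures in perspective. The sketch below uses only the numbers quoted above; the helper names are made up for this example, and the results are peak values, so real transfers will be slower.

```python
def per_link_bandwidth(total_gb_per_s, links):
    """Average bandwidth per NVLink link, given the quoted total."""
    return total_gb_per_s / links


def seconds_to_move(size_gb, bandwidth_gb_per_s):
    """Best-case time to move `size_gb` at the quoted peak bandwidth."""
    return size_gb / bandwidth_gb_per_s


gv100_per_link = per_link_bandwidth(300, 6)      # Volta GV100: 6 links, 300 GB/s
gp100_per_link = per_link_bandwidth(160, 4)      # GP100: 4 links, 160 GB/s
total_speedup = 300 / 160                        # GV100 total vs GP100 total
hbm2_sweep_ms = seconds_to_move(16, 900) * 1000  # read all 16 GB once at peak

print(gv100_per_link, gp100_per_link, total_speedup)
print(f"{hbm2_sweep_ms:.1f} ms")
```

So the gain over GP100 comes both from more links (six instead of four) and from faster links (50 GB/s instead of 40 GB/s each), and at peak bandwidth the GPU could read its entire 16 GB of memory in under 20 milliseconds.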

Nvidia is the dominant player in the GPU market, but it faces competition from several companies, including AMD, Intel, and Qualcomm.

- AMD is Nvidia’s main competitor in the discrete GPU market, offering a range of GPUs for gaming, professional workstations, and data centers. AMD’s Radeon GPUs are known for their performance and value, and they have gained market share in recent years.

- Intel is a major player in the CPU market, but it is also expanding into the GPU market with its Arc GPUs. Intel’s Arc GPUs are targeting the mid-range and high-end gaming markets, and they are also designed for use in data centers.

- Qualcomm is a major player in the mobile GPU market, with its Adreno GPUs powering billions of smartphones and tablets. Qualcomm is also developing GPUs for laptops and desktops, and it is a potential competitor to Nvidia in the data center market.

In addition to these major competitors, there are a number of smaller companies developing GPUs for niche markets, such as cloud gaming and cryptocurrency mining.

Here is a table summarizing the key competitors in the GPU market:

| Company | Focus | Strengths | Weaknesses |
| --- | --- | --- | --- |
| Nvidia | Gaming, professional workstations, data centers | High performance, strong brand reputation, large developer ecosystem | High prices |
| AMD | Gaming, professional workstations, data centers | Good performance, value for money, growing market share | Less mature software ecosystem than Nvidia |
| Intel | Data centers, gaming | Strong brand reputation, large manufacturing capabilities, growing GPU business | Limited experience in the GPU market |
| Qualcomm | Mobile GPUs, data centers | Strong position in the mobile market, growing GPU business | Limited experience in the PC and data center markets |

The GPU market is highly competitive, and the companies listed above are constantly innovating and developing new products to stay ahead of the curve. It will be interesting to see how the market evolves in the years to come.


One reader, Oliver M S, left the following comment on this article:

Btw your description of distinction between CPU and GPU is misleading. Sequential processing is not a feature CPUs excel when compared to GPUs. Modern CPUs do parallel processing all the time as well. At multiple levels.

1. by typically having multiple cores. Commonly 4…32 nowadays. Each core executes instructions in parallel.

2. then there is hyperthreading on each core. This means that in many (though not all) situations instructions from two distinct threads may get executed in parallel on one core.

3. then there is instruction level parallelism. This means that certain combinations of subsequent CPU instructions may be executed in parallel within one core and thread. Typically in the order of 2…3. This is highly variable per instructions though.

Main distinction to GPUs are:

1. GPU instruction sets are simpler and fields of use more limited than CPU instruction sets. They’re not fully general purpose like CPUs. They’re optimized for mathematical calculations.

2. Due to simpler instruction sets, each single instruction on GPU takes typically less total time to execute than on CPU.

3. although GPUs neither have hyperthreading nor instruction level parallelism, due to their simpler design per core they have massively more cores (~1000x) than CPUs. I.e. in 2023, GPUs with 1024…16384 cores are common.

Together with faster execution per instruction, this is what makes GPUs typically several 100 times or even more than 1000 times faster than CPUs.
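As a sanity check on the core counts quoted in the comment above (4 to 32 CPU cores, 1024 to 16384 GPU cores), the short sketch below computes the range of core-count ratios. It compares core counts only; it assumes nothing about per-core speed, so it is not a measure of real-world speedup.

```python
def core_ratio(gpu_cores, cpu_cores):
    """How many GPU cores there are per CPU core."""
    return gpu_cores / cpu_cores


cpu_range = (4, 32)        # CPU core counts quoted in the comment
gpu_range = (1024, 16384)  # GPU core counts quoted in the comment

smallest = core_ratio(gpu_range[0], cpu_range[1])  # small GPU vs large CPU
largest = core_ratio(gpu_range[1], cpu_range[0])   # large GPU vs small CPU
like_for_like = (
    core_ratio(gpu_range[0], cpu_range[0]),        # entry-level vs entry-level
    core_ratio(gpu_range[1], cpu_range[1]),        # high-end vs high-end
)

print(smallest, largest, like_for_like)
```

Depending on which parts are paired, the ratio runs from 32x to 4096x, and a like-for-like pairing gives a few hundred times, so the comment's "~1000x" is best read as an order-of-magnitude figure.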